It’s a fact that data fuels AI, but how you collect it makes all the difference. This blog will explore the best way to extract data: Is relying on APIs the best choice, or is web scraping more effective for AI training data?

AI models are built on data as their primary foundation. This data can include everything from recent political news articles and tweets about Zelenskyy and Trump to Reddit conversations, videos, images, and nearly anything else generated on the internet. The type of data you use depends on the AI model you’re building, like machine learning, supervised learning, unsupervised learning, Natural Language Processing (NLP), and deep learning.

But raw data alone isn’t enough to build these robust models. To train AI effectively, you need high volumes of relevant, well-structured data. High-quality training data is essential for developing an AI application that delivers accurate and reliable results.

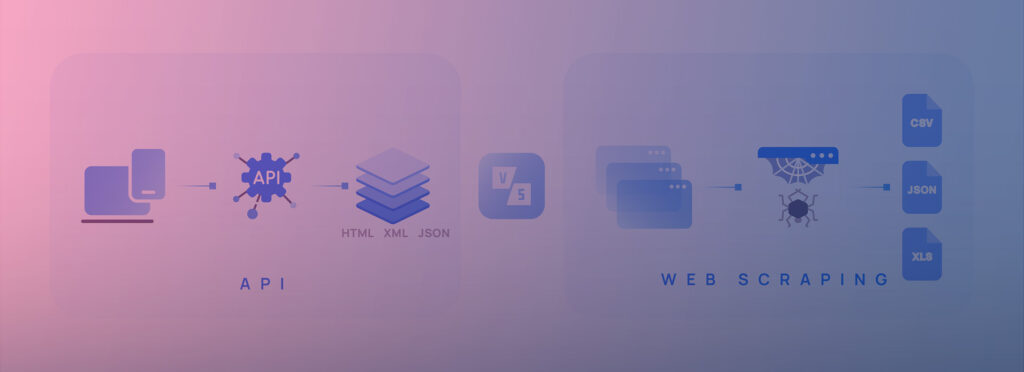

Now, getting high-quality data is another big challenge. There are two main paths you can follow for access to quality data at scale:

- APIs – When platforms offer structured data access but with limitations.

- Web Scraping – When you need full control and access to the raw data available on the web.

What are APIs and how do they work?

An Application Programming Interface (API) is a set of rules and protocols that allow different systems or applications to connect and communicate with each other efficiently.

APIs are the middleman that allow software to request and exchange data without needing direct access to the underlying database or code. Many platforms provide APIs to give developers direct access to structured data without manually extracting it from web pages.

Now, how do they work?

Think of an API as a bridge that enables seamless communication between two software applications. When a developer or user makes a request to a specific API endpoint, they send structured instructions specifying the action they want to perform and any necessary parameters.

Once the API receives the request, it processes the instructions, retrieves the requested data, and sends it back in a structured format—typically JSON or XML—so it can be easily integrated into the developer’s application. One thing to remember is that while APIs provide clean, structured, and ready-to-use data, they come with limitations. You can’t modify the data beyond what the API allows, and access may be restricted by rate limits, paywalls, or predefined data availability.

APIs for AI training data extraction

AI models rely on vast amounts of diverse, high-quality data, but collecting it efficiently is a challenge. APIs provide a structured way to extract data from social media platforms, e-commerce sites, financial databases, and other sources, making them a valuable tool for AI training.

Many platforms offer official APIs in JSON or XML formats, enabling access to key datasets:

- Reddit → Posts, comments, and user interactions for NLP models.

- GitHub → Code repositories and developer activity for AI-driven code suggestions.

- LinkedIn → Job postings for labor market analysis.

- OpenWeatherMap → Real-time meteorological data for predictive analytics.

These datasets are crucial for training AI models, large language models (LLMs), and machine learning algorithms. For example:

- E-commerce APIs help extract product details, prices, and customer reviews to train AI-powered chatbots and sentiment analysis models.

- Financial APIs provide real-time market data for AI-driven stock predictions and automated trading systems.

- Social media APIs enable NLP models to analyze public opinion, detect trends, and even generate AI-powered content.

Thus, APIs are excellent for obtaining structured data directly from the website that is ready for integration and use.

What is web scraping?

Web scraping is a method of automatically extracting data (text, images, videos, etc) from websites. Instead of manually copy-pasting information, developers write code to collect and structure data efficiently. You can literally get what you see on the web page by web scraping.

This is typically done using Python along with tools like Scrapy (a web scraping framework), BeautifulSoup (an HTML parser), and Puppeteer (a headless browser for scraping dynamic content).

The web scraping process involves:

- Sending an HTTP Request → The scraper sends requests to a web server.

- Downloading the HTML Content → The server responds with the page’s HTML, which contains the raw data.

- Parsing the Data → Using libraries like BeautifulSoup or lxml, the scraper extracts specific information from the HTML.

- Structuring the Extracted Data → Cleaning, formatting, and converting the data into structured formats like CSV, JSON, or XLS.

- Storing the Data → Saving the extracted datasets in databases for further analysis.

Web scraping services have made it easier for businesses to access real-time data from the web without needing coding expertise.

Specifically, Grepsr provides a fully managed data extraction service, delivering customized datasets in the exact volume and format clients require—eliminating the complexities of web scraping and data processing.

Learn more about how web scraped data is essential for AI training

A Side-by-Side Comparison

| Aspect | APIs | Web Scraping |

| Data Access | Provides structured data as defined by the API provider, often with limited data access. | Allows extraction of any publicly available data on a website, offering more flexibility. |

| Integration | Easier to integrate with well-documented endpoints and structured formats like JSON or XML. | Requires parsing HTML, handling dynamic content, and adapting to website structure changes. |

| Rate Limits & Restrictions | Often has rate limits, access restrictions, or paid tiers that limit the amount of data retrievable. | No limits, but excessive scraping and strain on the website triggers anti-scraping mechanisms or IP bans. |

| Data Volume & Scalability | Limited flexibility and control, along with API rate restrictions and limited availability of data. | Can collect large-scale data without restrictions. Web scraping services even bypass anti-scraping mechanisms easily. |

| Cost Implications | Costs are tiered and rise significantly as your data requirements and volume increase. | Cost-effective because you can access a high volume of quality data at the frequency you prefer at a low cost. |

| Legal & Ethical Considerations | Completely legal and aligns with platform policies. | Might raise legal concerns if the scraper violates the website’s terms of service and robots.txt. |

Learn more in detail

Which one is the best for collecting AI training data?

The right choice depends on your requirements, but if you need real-time access, large-scale data collection, and a cost-effective solution, web scraping is the better option.

Unlike APIs, web scraping can:

✔ Navigate multiple pages and extract data from different sources.

✔️ Handle various formats and capture dynamic content.

✔ Track historical data—essential for AI models that require trend analysis.

On the other hand, APIs come with major limitations:

- Rate limits restrict how much data you can access per minute or hour.

- Many APIs charge high fees for access to valuable data.

- Limited data access—API providers control what data they expose, often leaving out critical information.

- No historical data—most APIs only provide real-time updates, making long-term trend analysis difficult.

For example, a retailer can extract daily product prices from competitors and build a historical price database by web scraping. Whereas the website’s API only provides current prices.

But what if you could combine the structure of APIs with the flexibility of web scraping?

That’s where Web Scraping APIs come in—the best of both worlds.

Web Scraping APIs: The Best of Both Worlds?

Web Scraping APIs combine the flexibility of web scraping with the structured ease of APIs, offering a seamless way to extract data without dealing with the complexities of building a scraper from scratch.

How It Works:

Instead of manually scraping websites, businesses use Web Scraping APIs that automate the process.

These APIs handle everything—sending requests, parsing HTML, handling JavaScript rendering, avoiding bot detection, and structuring the extracted data.

Then, the output is delivered in an API format (e.g., JSON or XML), making it as easy to integrate as a standard API.

Why Web Scraping APIs Are the Best of Both Worlds:

✅ No coding required → Users don’t need to write scrapers or manage proxies.

☑️ Access to full website data → Unlike official APIs, scraping APIs extract everything visible on a webpage.

✅ Custom data delivery → Businesses can define the exact data points they need, ensuring relevant and structured output.

☑️ Scalability → They can handle large volumes of requests without worrying about rate limits or API restrictions.

Grepsr’s Web Scraping APIs take the hassle out of data extraction, delivering structured, high-quality data at scale—without the need to manage scrapers, proxies, or website challenges. Whether you need real-time updates or large-scale data feeds, our fully managed solution ensures seamless, efficient, and customizable data delivery.

Get the data you need—structured, formatted, and ready for seamless integration into your systems. Talk to our team today!